Trust is the crucial fulcrum for any AI leverage

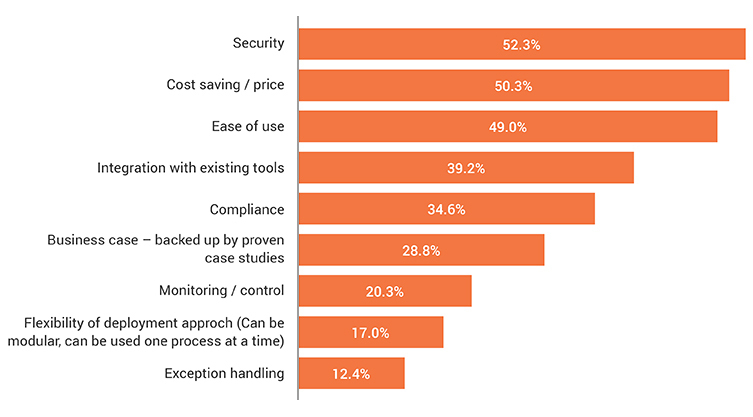

An HFS study on machine learning (ML) adoption shows that more than 50% of respondents consider proven security critical to trusting ML enough to use it (see Exhibit 1). Enterprise users will adopt these transformational technologies only when they trust AI-ML solutions to be safe and secure.

When enterprise solution architects or designers craft an AI-ML solution with human trust in mind, the human users, not the algorithms, need to be at the center. Otherwise, to borrow from Franz Kafka, the cages (the AI algorithms) will go in search of the birds (the users), who will become suspicious and fly away. We’ve identified five critical practices to help you avoid caging, and spooking, your users.

Exhibit 1. What proof points do enterprise leaders need to see before using ML?

Which of the following would ML need to demonstrate before your organization considers using it? (N=153)

Sample: 153 senior level executives from organizations with $1 billion or more in revenues

Source: HFS Research, 2018

Follow these five practices to design trustworthy AI solutions that keep the user at the center

Here are five essential design-for-trust (DfT) practices:

1. Start with the users’ perceptions and worries about the problem AI will solve.

Shrinkage detection is a typical AI-ML use case for retail. Shrinkage typically means loss of revenue, business, and inventory due to employee theft, shoplifting, administrative error, vendor fraud, or damage during transit or in the store. To build this use case, start by putting a typical shop manager, the user persona, at the center, and analyze how shrinkage and security concerns affect that manager’s day, performance, productivity, and business contributions. Don’t start the design activity by deciding which algorithms (e.g., random forests) to use to detect shrinkage as an anomaly. Algorithm selection belongs to the implementation phase, not the design phase.

2. Showcase both functional and behavioral safety and security aspects transparently and understandably.

Security is a necessary element of trust. While designing security into AI solutions can require technical discussions, there is no point in throwing around tech jargon during the business-application-based design phase, such as in focus groups with users. It’s far more relevant to share with users the functional and behavioral security requirements of the targeted business problem. Sharing these requirements is especially important during the initial design phase. If you are using a design thinking methodology such as the Double Diamond approach, engage deeply with users during the first diamond, where the target problem, its root causes, and the associated security concerns are analyzed in detail.

3. Involve social scientists, psychologists, behavioral sciences experts, and even neuroscience experts if required, during the early stages of solution design.

In the retail shrinkage example, how do different shop managers react when a case and the guilty parties are identified? Human decision makers and action agents operate with varying levels of empathy and emotional intelligence. Some shop managers may react instantly and follow the company rulebook to the letter; they want to proudly show higher management their efficiency. Others may try to understand why an employee behaved that way, for example, whether the act was entirely mala fide or inadvertent. There can be a neuroscientific viewpoint, too: what if the guilty party suffers from a compulsive behavioral disorder such as kleptomania, and rash treatment aggravates the condition, potentially exposing the organization to legal complications over the protection of employees with disabilities? If the autonomous AI solution must emulate a shop manager’s persona across this broad spectrum, it may have to not only detect the shrinkage but also identify the underlying gap and the potential causes or triggers. It must then recommend the most appropriate response on a case-by-case basis to ensure a fair, safe, and humane resolution.

4. Include explainability, interpretability, and transparency in the solution design.

To design the example AI solution for shrinkage detection and response recommendation, developers must factor in explainable AI (XAI) algorithms and techniques that answer at least these two critical questions:

- Why did the system identify a particular incident as a potential anomaly or shrinkage? Which parameters and values form the basis of the decision? In decision-tree-based models, it is not difficult to trace the values back through the branches and nodes of the trees and understand why the system flagged a specific sequence as an anomaly.

- Why did the autonomous action recommender recommend a specific action in a specific case? Answering this question is a far harder problem because it requires explaining decisions drawn from a potentially large, unstructured text corpus such as company rulebooks and policy documents. This module will likely use a deep learning algorithm such as a long short-term memory (LSTM) network to classify and search for the best-fit actions for an identified case, along with heatmaps that highlight the most significant words or phrases for explainability. Here, techniques like layer-wise relevance propagation (LRP) will probably be more effective than alternatives such as sensitivity analysis or deconvolutional methods.
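To give a feel for how LRP produces word-level relevance scores for the second question, here is a minimal sketch. It is not an LSTM: full LRP for an LSTM needs extra handling for its gates, so this example swaps in a tiny two-layer feed-forward scorer over word counts and applies the LRP epsilon rule to it. The vocabulary, the weights, and the function names are all hypothetical and chosen only for illustration.

```python
def relu(values):
    return [max(0.0, v) for v in values]


def forward(activations, weights):
    """Linear layer without bias: weights[i][j] links input i to output j."""
    n_out = len(weights[0])
    return [sum(a * row[j] for a, row in zip(activations, weights))
            for j in range(n_out)]


def lrp_epsilon(activations, weights, relevance_out, eps=1e-6):
    """Redistribute each output's relevance to the inputs that produced it."""
    relevance_in = [0.0] * len(activations)
    for j, r_out in enumerate(relevance_out):
        z = sum(a * row[j] for a, row in zip(activations, weights))
        z += eps if z >= 0 else -eps  # stabiliser avoids division by zero
        for i, a in enumerate(activations):
            relevance_in[i] += a * weights[i][j] / z * r_out
    return relevance_in


# Hypothetical vocabulary and hand-set weights (a real model would learn them)
VOCAB = ["refund", "receipt", "void", "approved"]
W1 = [[0.5, -0.2], [-0.4, 0.3], [0.8, 0.1], [-0.3, 0.6]]  # words -> hidden
W2 = [[1.0], [-0.5]]                                      # hidden -> score

counts = [1.0, 0.0, 2.0, 1.0]  # word counts for one incident note
hidden = relu(forward(counts, W1))
score = forward(hidden, W2)[0]

# Start with all relevance on the output score, then walk back layer by layer
hidden_relevance = lrp_epsilon(hidden, W2, [score])
word_relevance = dict(zip(VOCAB, lrp_epsilon(counts, W1, hidden_relevance)))

for word, relevance in sorted(word_relevance.items(), key=lambda kv: -kv[1]):
    print(f"{word:10s} {relevance:+.2f}")
```

The per-word relevance values sum (up to the small stabiliser) to the model's score, which is what makes them suitable for coloring a heatmap: positive values mark words that pushed the score up, negative values mark words that pushed it down.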

Also, both local and global explainability, across the nodes and the paths to reach them, will have to be considered even in this simple and well-defined use case.
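For the first question, tracing a decision back through a tree can be sketched in a few lines. The example below is a minimal illustration, assuming a single hand-built tree rather than a trained model; the feature names (`void_count`, `stock_gap_pct`), the thresholds, and the labels are hypothetical. `explain` gives a local explanation for one record, while `features_used` gives a simple global view of what the tree can test.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    label: str


@dataclass
class Split:
    feature: str
    threshold: float
    low: "Node"   # branch taken when value <= threshold
    high: "Node"  # branch taken when value > threshold


Node = Union[Leaf, Split]

# Hypothetical hand-built tree; a real model would be learned from data
TREE = Split(
    "void_count", 5,
    low=Leaf("normal"),
    high=Split("stock_gap_pct", 2.0,
               low=Leaf("normal"),
               high=Leaf("possible shrinkage")),
)


def explain(node, record):
    """Local view: return the label plus every test passed on the way."""
    reasons = []
    while isinstance(node, Split):
        value = record[node.feature]
        if value <= node.threshold:
            reasons.append(f"{node.feature} = {value} <= {node.threshold}")
            node = node.low
        else:
            reasons.append(f"{node.feature} = {value} > {node.threshold}")
            node = node.high
    return node.label, reasons


def features_used(node):
    """Global view: every feature the tree can ever test."""
    if isinstance(node, Leaf):
        return set()
    return {node.feature} | features_used(node.low) | features_used(node.high)


label, reasons = explain(TREE, {"void_count": 9, "stock_gap_pct": 3.5})
print(label)
for reason in reasons:
    print("  because", reason)
print("tree tests:", sorted(features_used(TREE)))
```

With an ensemble such as a random forest, the same idea applies per tree, but the individual paths then have to be aggregated before they can be shown to a shop manager in plain language.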

5. Design AI solutions with a variety of modes and options so that the solution can offer a truly digital, mass-personalized experience to each user.

Personalization is critical because the evaluation of “trust” is personal. Humans trust other humans on a case-by-case basis. There is no empirical behavioral formula that makes any one person trusted by everyone; the same constraint applies to AI solutions. That’s why an AI solution needs multiple smart and intuitive ways to earn each user’s trust at the individual level, so that users can interact with the solution in the way they feel most comfortable.

Bottom line: Designing for trust is a must for successful AI solution adoption

Trust and security are intertwined, and AI solutions are no exception. The complexity of the technologies involved doesn’t make AI solutions naturally trustworthy to their human users. Following the five design-for-trust practices is essential to building successful AI solutions.